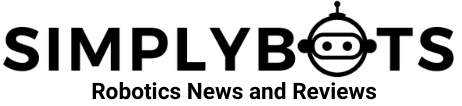

Two weeks ago, Figure announced a partnership with OpenAI to push the boundaries of AI and robot learning. Figure has now released a video of its humanoid robot, Figure 01, holding a conversation driven by the results of this partnership.

Connecting Figure 01 to a large OpenAI pre-trained multimodal model allows for new capabilities. Figure 01 + OpenAI can now:

• Describe its visual experience

• Plan future actions and use common sense reasoning

• Reflect on its memory

• Explain its reasoning verbally

Figure 01’s onboard cameras feed into a large vision-language model (VLM) trained by OpenAI that understands both images and text. This model reportedly processes the entire history of the conversation (including past images) to determine language responses, which are spoken back to humans. The same model also decides which learned, closed-loop behavior to run on the robot to fulfill a given command.
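To make that control flow concrete, here is a minimal Python sketch of one conversational step. It is an illustration only, not Figure's or OpenAI's implementation: the names `Turn`, `ModelOutput`, `BEHAVIORS`, `stub_vlm`, and `step` are hypothetical, the behaviors are placeholders, and the "model" is a keyword-matching stub standing in for the real VLM.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Turn:
    """One entry in the conversation history: text plus an optional camera frame."""
    speaker: str
    text: str
    image: Optional[bytes] = None


@dataclass
class ModelOutput:
    reply: str     # text that would be spoken back to the human
    behavior: str  # name of the learned behavior to run, or "none"


# Hypothetical library of learned closed-loop behaviors (placeholders only).
BEHAVIORS: dict[str, Callable[[], str]] = {
    "pick_up_object": lambda: "picked up object",
    "none": lambda: "no action",
}


def stub_vlm(history: list[Turn]) -> ModelOutput:
    """Stand-in for the vision-language model: simple keyword matching."""
    last = history[-1].text.lower()
    if "pick up" in last:
        return ModelOutput("Sure, picking it up.", "pick_up_object")
    return ModelOutput("I can see the scene in front of me.", "none")


def step(history: list[Turn], user_text: str, frame: bytes,
         model: Callable[[list[Turn]], ModelOutput] = stub_vlm) -> tuple[str, str]:
    """Run one turn: record the input, query the model, dispatch a behavior."""
    history.append(Turn("human", user_text, frame))
    out = model(history)  # the model receives the whole history, images included
    history.append(Turn("robot", out.reply))
    # Unknown behavior names fall back to doing nothing.
    result = BEHAVIORS.get(out.behavior, BEHAVIORS["none"])()
    return out.reply, result


history: list[Turn] = []
reply, result = step(history, "Can you pick up the cup?", b"<camera frame>")
```

The point of the sketch is the shape of the loop: a single model call returns both the spoken reply and the choice of behavior, and the history grows with every turn so later calls can refer back to earlier images and text.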